
Results

Copyright (C) 2014 by Amir Shachar, Oren Renard and Saar Arbel

By Example Algorithm

In this algorithm we receive an image, degrade its quality, and generate numerous degraded copies, which we use as a data set. We then take these images and apply three masks, two of which (decimation and optical blur) are fixed for all images. The purpose of the masks is to reverse the actual blur and downsampling that occurred when the images were taken. Applying the masks gives us a set of equations in which the masks supply the coefficients and the variables are the individual pixels of the image.

Given this set of equations, we use an optimization algorithm (gradient descent) to iteratively adjust our "guess" of the solution. When the optimization is done, we are guaranteed a mean squared error lower than an epsilon that we set ourselves.
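The optimization loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's code: 1-D signals stand in for images, the third (per-image) mask is assumed here to be a circular shift, the blur is assumed to be a 3-tap box filter, and all function names (`shift_matrix`, `blur_matrix`, `decimation_matrix`, `super_resolve`) are hypothetical.

```python
# Sketch only: 1-D signals stand in for images, and the operators below are
# illustrative assumptions, not the project's actual masks.

def shift_matrix(n, s):
    """Circular shift by s samples (assumed per-image mask)."""
    return [[1.0 if j == (i + s) % n else 0.0 for j in range(n)] for i in range(n)]

def blur_matrix(n):
    """3-tap circular box blur (assumed optical blur, same for all images)."""
    return [[1.0 / 3.0 if (j - i) % n in (0, 1, n - 1) else 0.0 for j in range(n)]
            for i in range(n)]

def decimation_matrix(n, factor):
    """Keep every `factor`-th sample (same for all images)."""
    return [[1.0 if j == i * factor else 0.0 for j in range(n)]
            for i in range(n // factor)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def matvec(a, x):
    return [sum(c * v for c, v in zip(row, x)) for row in a]

def super_resolve(operators, observations, n, step=1.0, epsilon=1e-12, max_iters=5000):
    """Gradient descent on 0.5 * sum_k ||A_k x - y_k||^2, starting from zeros.

    Returns the guess and the last evaluated mean squared error; the error is
    below epsilon only if the loop stopped before max_iters ran out.
    """
    x = [0.0] * n
    mse = float("inf")
    for _ in range(max_iters):
        # Residual of every equation under the current guess.
        residuals = [[p - q for p, q in zip(matvec(a, x), y)]
                     for a, y in zip(operators, observations)]
        count = sum(len(r) for r in residuals)
        mse = sum(v * v for r in residuals for v in r) / count
        if mse < epsilon:
            break
        # Gradient step: x <- x - step * sum_k A_k^T r_k.
        for a, r in zip(operators, residuals):
            for i, row in enumerate(a):
                for j, c in enumerate(row):
                    x[j] -= step * c * r[i]
    return x, mse

# Two degraded copies of one 8-sample signal: shift, then blur, then decimate by 2.
n = 8
truth = [0.0, 1.0, 4.0, 2.0, 3.0, 5.0, 1.0, 2.0]
operators = [matmul(decimation_matrix(n, 2), matmul(blur_matrix(n), shift_matrix(n, s)))
             for s in (0, 1)]
observations = [matvec(a, truth) for a in operators]
estimate, mse = super_resolve(operators, observations, n)
```

In this toy setup the two shifted copies together contain enough equations to determine all eight unknowns, which is why the guess can approach the original signal. With real images the operators would be far too large to store as dense matrices, so they would normally be applied as filtering and resampling steps instead.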

| Generated Dataset |

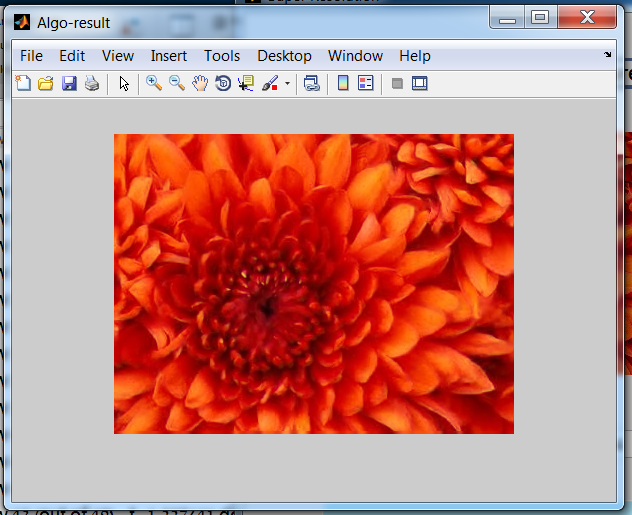

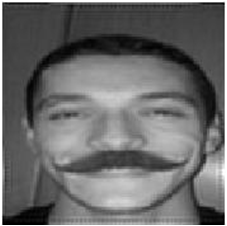

| Super Resolution result | Original high-res image |

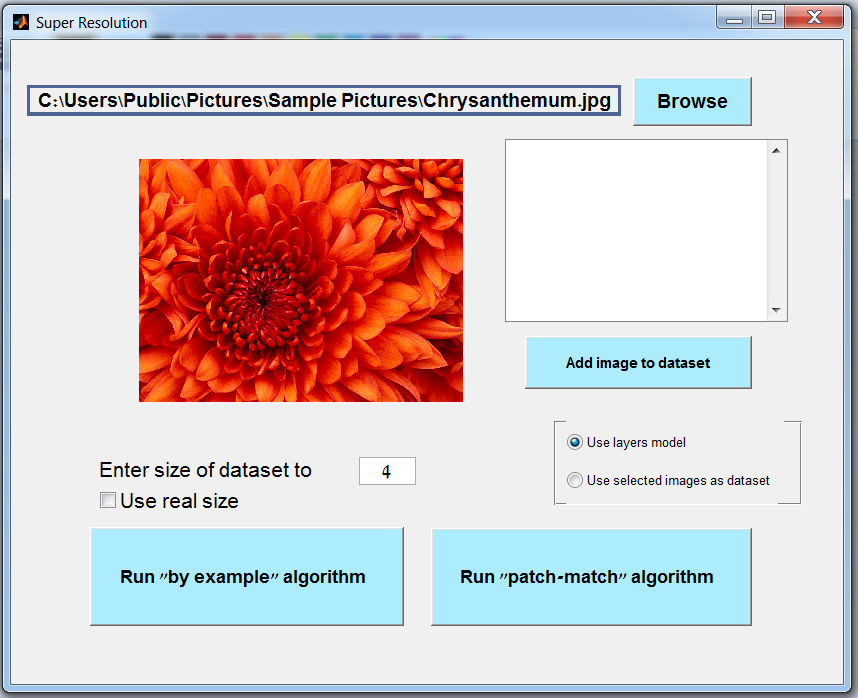

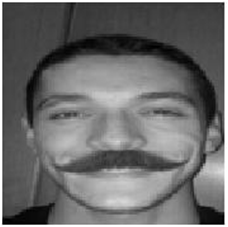

Patch-Match Algorithm

In this algorithm we receive an image, and the user then chooses between two options:

First option:

Layer model: this option is best for natural pictures such as landscapes or animals. Natural pictures often contain repeating patterns. The great advantage of this algorithm for natural pictures is that it extracts the database it requires from a single image. The image is stretched to become larger or smaller, and many rescaled copies are put into the database. The algorithm then studies the different patches. Here we can exploit the repeating patterns: the algorithm needs pairs of patches (a small patch and an enlarged patch), and the rescaling provides exactly that.
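As a rough illustration of how rescaling a single image yields pairs of small and enlarged patches, here is a minimal sketch. It assumes a grayscale image stored as a list of rows, box-averaging as the downscaling method, and a single scale factor of 2; the helper names (`downscale`, `crop`, `build_patch_pairs`) are hypothetical and not taken from the project.

```python
def downscale(image, factor=2):
    """Shrink a grayscale image by averaging factor x factor blocks (assumed method)."""
    h, w = len(image) // factor, len(image[0]) // factor
    return [[sum(image[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / factor ** 2
             for x in range(w)]
            for y in range(h)]

def crop(image, top, left, size):
    """Return the size x size patch whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]

def build_patch_pairs(image, patch=2, factor=2):
    """Pair every small patch of the shrunken image with the matching
    region of the original image, which is `factor` times larger."""
    small = downscale(image, factor)
    pairs = []
    for y in range(len(small) - patch + 1):
        for x in range(len(small[0]) - patch + 1):
            low = crop(small, y, x, patch)
            high = crop(image, y * factor, x * factor, patch * factor)
            pairs.append((low, high))
    return pairs

# An 8x8 test image whose pixel value encodes its position.
image = [[float(y * 8 + x) for x in range(8)] for y in range(8)]
pairs = build_patch_pairs(image)
```

Each entry of `pairs` holds a 2x2 small patch and the 4x4 region it was derived from. Repeating the same procedure at several scales would add more copies to the database, in the spirit of the description above.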

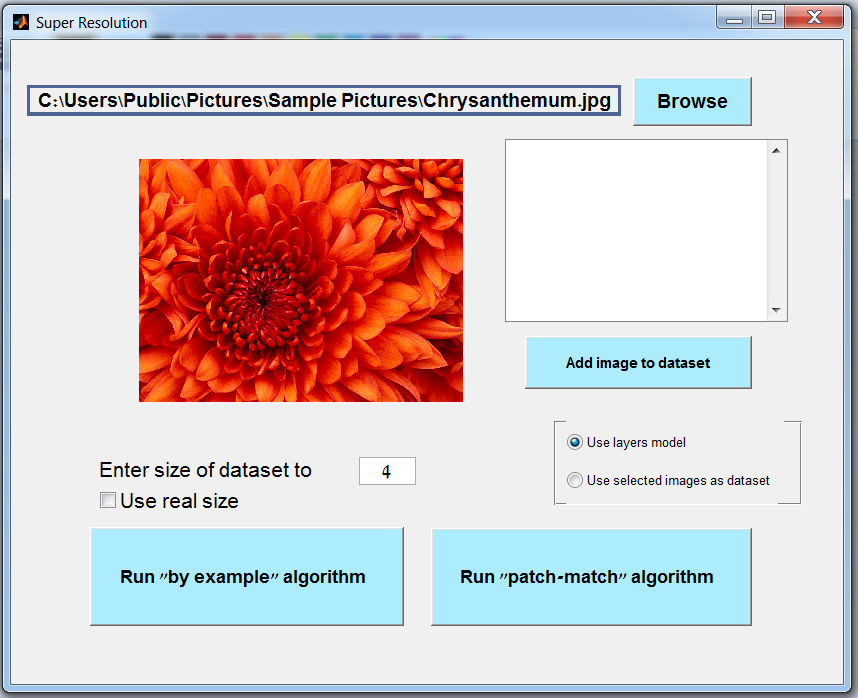

| Selected Database | Result |

Second option:

User-set database: this option is good when the photo is unique (meaning it shows something unlikely or unnatural). The user chooses which images to supply to the database, so the quality of the results depends entirely on the user's input.

The rest of the program proceeds the same way for both options: the algorithm takes whatever database it was given and studies it. This part takes a long time (growing with the size and number of images), since the images must be decomposed into patches, a tedious process. Once the studying is complete, the rebuild begins. The program scans the input image and divides it into patches. For each patch it searches for the nearest match in the database, takes that match's enlarged counterpart, and uses it to build the new image.
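The rebuild step can be sketched along the following lines. This is a simplified illustration rather than the project's implementation: it assumes non-overlapping patches, a sum-of-squared-differences distance for the "nearest match", a brute-force search, and a database given as a list of (small patch, enlarged patch) pairs. The names `ssd`, `nearest_pair`, and `rebuild` are hypothetical.

```python
def crop(image, top, left, size):
    """Return the size x size patch whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]

def ssd(a, b):
    """Sum of squared differences between two equally sized patches."""
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb))

def nearest_pair(patch, database):
    """Brute-force search for the pair whose small patch is closest to `patch`."""
    return min(database, key=lambda pair: ssd(patch, pair[0]))

def rebuild(image, database, patch=2, factor=2):
    """Replace each patch of `image` with the enlarged counterpart of its
    nearest database match. Pixels not covered by a full patch stay 0."""
    h, w = len(image), len(image[0])
    out = [[0.0] * (w * factor) for _ in range(h * factor)]
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            _, high = nearest_pair(crop(image, y, x, patch), database)
            # Paste the enlarged patch at the scaled-up position.
            for dy, row in enumerate(high):
                out[y * factor + dy][x * factor:x * factor + len(row)] = row
    return out

# Toy database: one dark pair and one bright pair.
database = [
    ([[0, 0], [0, 0]], [[0] * 4 for _ in range(4)]),
    ([[9, 9], [9, 9]], [[9] * 4 for _ in range(4)]),
]
noisy = [[1, 0, 8, 9],
         [0, 1, 9, 8]]
result = rebuild(noisy, database)
```

The brute-force search compares every input patch against every database entry, which is consistent with the long running time noted above. Overlapping patches with blending would likely give smoother seams, but that is left out to keep the sketch short.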