Image Re-coloring

Colorization by Example

Reported by Ron Yano and Mariann Normatov, July 2014

Based on "Colorization by Example" by R. Irony, D. Cohen-Or, and D. Lischinski, Eurographics Symposium on Rendering (2005)


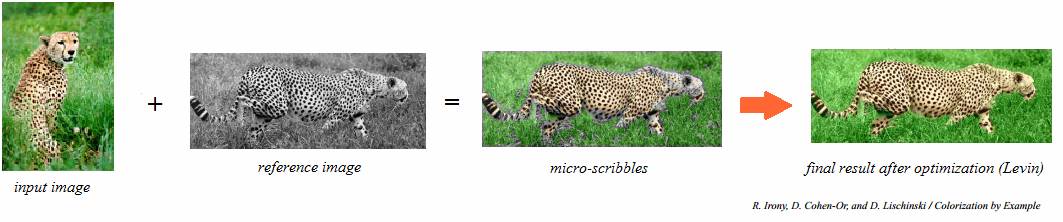

What is this project all about?

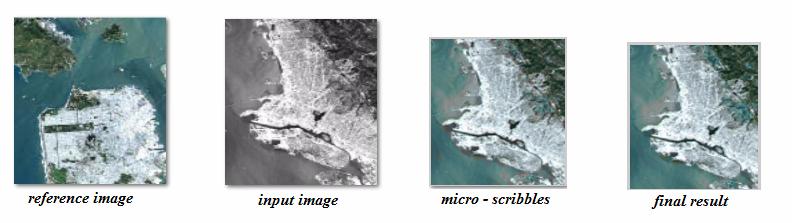

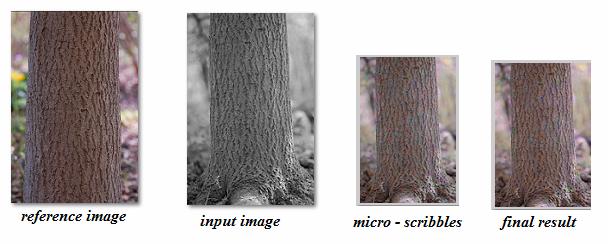

In this project we implemented a technique for colorizing grayscale images by example. Given an input grayscale image and a colored reference image (the example), we transfer the chromatic channels from the example to the grayscale image in a multi-stage process. The result is a set of automatically generated micro-scribbles on the grayscale image, which prepare it for the final colorization stage based on the Levin algorithm.

Usage

· Color old “black and white” pictures.

· Re-color images with a different color palette.

· Quick overview

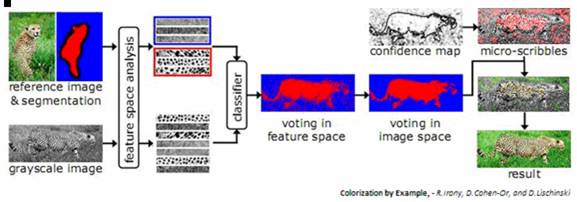

The reference image is segmented into its different regions. Each region is assigned a label, and the labeled regions are used in the subsequent supervised classification scheme that segments the input image. Features are extracted from both the input and reference images. The input image regions are classified in a two-step process: voting in feature space, then voting in image space. Finally, the colors with a high computed "confidence" are transferred from the reference image to the input image and then fed as input to the optimization step.

· Detailed solution and approaches

Ø

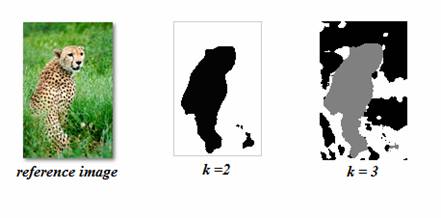

Input and

Segmentation

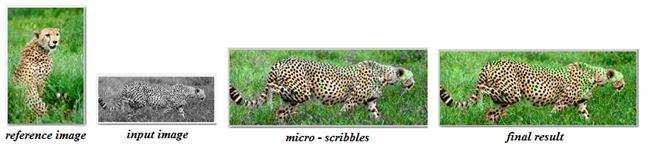

The two cheetah images illustrated above are chosen as the input and reference images, respectively.

The reference image is converted to its luminance channel and then segmented by the k-means algorithm, given the parameter k.

Each segment is associated with its own label.
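The segmentation step can be sketched roughly as follows. This is a minimal illustration, not the project's code: it assumes that k-means clusters pixels by luminance intensity alone, that luminance is the Rec. 601 weighted sum of RGB, and that cluster centers start evenly spaced over the intensity range. The function names `luminance`, `kmeans_1d` and `segment_reference` are hypothetical.

```python
import numpy as np

def luminance(rgb):
    """Rec. 601 luma of an (H, W, 3) float RGB array (assumed conversion)."""
    return rgb @ np.array([0.299, 0.587, 0.114])

def kmeans_1d(values, k, iters=20):
    """Lloyd's k-means on scalar intensities; returns (labels, centers)."""
    flat = values.ravel().astype(float)
    # Assumption: initialise the centers evenly across the intensity range.
    centers = np.linspace(flat.min(), flat.max(), k)
    for _ in range(iters):
        # Assign every pixel to its closest center.
        labels = np.argmin(np.abs(flat[:, None] - centers[None, :]), axis=1)
        # Move each non-empty center to the mean of its pixels.
        for j in range(k):
            if np.any(labels == j):
                centers[j] = flat[labels == j].mean()
    labels = np.argmin(np.abs(flat[:, None] - centers[None, :]), axis=1)
    return labels.reshape(values.shape), centers

def segment_reference(rgb, k):
    """Label map of the reference image: one integer label per pixel."""
    return kmeans_1d(luminance(rgb), k)[0]
```

Calling `segment_reference(reference_rgb, k)` returns an (H, W) integer array in which each distinct value plays the role of one segment label.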

Ø Feature Extraction

We compute the DCT of the kxk neighborhood of each pixel in the reference image and pair the resulting coefficient vector with the pixel's label. Together, these labeled vectors form the feature space.

We also compute the DCT of the kxk neighborhood of each pixel in the input image, for the classification stage.

DCT coefficients are a simple texture descriptor that is not too sensitive to translation and rotation.
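As an illustration of the feature extraction, the sketch below computes the 2-D DCT of every pixel's kxk neighborhood with plain NumPy. It assumes an orthonormal DCT-II, an odd k, and reflective padding at the image border; none of these choices is stated on this page, and the names `dct_matrix` and `dct_features` are hypothetical.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis as an (n, n) matrix; rows are frequencies."""
    x = np.arange(n)
    u = x[:, None]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x[None, :] + 1) * u / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)
    return C

def dct_features(lum, k):
    """Return an (H, W, k*k) array: the 2-D DCT coefficients of the
    k x k neighborhood centred on each pixel of the luminance image."""
    assert k % 2 == 1, "this sketch assumes an odd neighborhood size"
    r = k // 2
    # Assumption: reflect the image at its border so every pixel has a full window.
    padded = np.pad(lum.astype(float), r, mode="reflect")
    C = dct_matrix(k)
    H, W = lum.shape
    feats = np.empty((H, W, k * k))
    for i in range(H):
        for j in range(W):
            patch = padded[i:i + k, j:j + k]
            # Separable 2-D DCT: transform the rows, then the columns.
            feats[i, j] = (C @ patch @ C.T).ravel()
    return feats
```

For the reference image, each vector `feats[i, j]` would be stored together with the segment label of pixel (i, j); for the input image, the vectors are the queries to classify.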

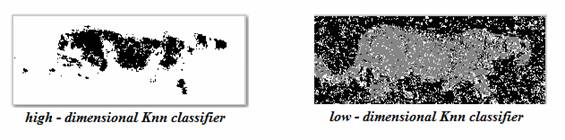

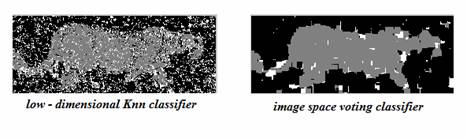

Ø Classification

We first classify a novel feature vector with the k-NN algorithm, using majority voting among its nearest neighbors in the previously computed feature space. However, this approach leads to many erroneous pixel classifications. Therefore, we use a dedicated technique to switch to a low-dimensional feature space and only then perform the classification by k-NN.
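The k-NN majority vote itself can be sketched as below. This is a brute-force illustration that assumes Euclidean distance and integer labels starting at 0; the function name `knn_classify` and the default of 5 neighbors are hypothetical, and a real image would call for a spatial index rather than a full distance matrix.

```python
import numpy as np

def knn_classify(train_feats, train_labels, queries, k=5):
    """Label each query vector by majority vote among its k nearest
    training vectors (squared Euclidean distance)."""
    train_feats = np.asarray(train_feats, dtype=float)
    train_labels = np.asarray(train_labels)
    queries = np.asarray(queries, dtype=float)
    # (M, N) matrix of squared distances between queries and training vectors.
    d2 = ((queries[:, None, :] - train_feats[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    votes = train_labels[nearest]  # (M, k) labels of the nearest neighbors
    n_labels = int(train_labels.max()) + 1
    # Majority vote; ties are broken in favour of the smallest label.
    return np.array([np.bincount(v, minlength=n_labels).argmax() for v in votes])
```

The same function applies unchanged whether the vectors are raw DCT coefficients or their low-dimensional projections.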

The switch to a low-dimensional space is based on examining the differences between vectors within the same class and the differences between vectors belonging to different classes. It is performed by applying a two-stage PCA to the initial feature space. The advantage is illustrated below.
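This page does not spell out the two PCA stages, so the following is only one plausible reading. It assumes that the first stage whitens randomly sampled intra-class difference vectors, so that within-class variation becomes uniform, and that the second stage keeps the directions along which the whitened inter-class differences vary most. The names `sample_differences` and `two_stage_pca`, the number of sampled pairs, and the `eps` regulariser are all hypothetical.

```python
import numpy as np

def sample_differences(feats, labels, n_pairs, same_class, rng):
    """Differences between random pairs of vectors drawn from the same
    class (same_class=True) or from different classes (same_class=False).
    Needs at least two classes with at least two vectors each."""
    diffs = []
    n = len(feats)
    while len(diffs) < n_pairs:
        i, j = rng.integers(0, n, size=2)
        if i != j and (labels[i] == labels[j]) == same_class:
            diffs.append(feats[i] - feats[j])
    return np.array(diffs)

def two_stage_pca(feats, labels, out_dim, n_pairs=2000, eps=1e-8, seed=0):
    """Return an (out_dim, d) projection matrix T; project with feats @ T.T."""
    rng = np.random.default_rng(seed)
    intra = sample_differences(feats, labels, n_pairs, True, rng)
    inter = sample_differences(feats, labels, n_pairs, False, rng)
    # Stage 1 (assumed): PCA of the intra-class differences, rescaled so
    # that within-class variation has unit variance in every direction.
    w, V = np.linalg.eigh(intra.T @ intra / len(intra))
    T_intra = (V / np.sqrt(w + eps)).T
    # Stage 2 (assumed): PCA of the whitened inter-class differences,
    # keeping the out_dim directions with the largest variance.
    inter_w = inter @ T_intra.T
    w2, V2 = np.linalg.eigh(inter_w.T @ inter_w / len(inter_w))
    T_inter = V2[:, ::-1][:, :out_dim].T  # eigh sorts eigenvalues ascending
    return T_inter @ T_intra
```

Under these assumptions, directions in which vectors of the same class already differ a lot are scaled down before the nearest-neighbor search, which is the intuition behind comparing intra-class and inter-class differences.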

Ø Image Space Voting

As we can see, many pixels of the input image are still misclassified. To improve the results, we consider N(p), the kxk neighborhood around a pixel p in the input image, and replace the label of p with the dominant label in N(p). The dominant label is the label with the highest confidence value conf(p,l).

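The confidence of pixel p with label l is defined as:

$$\mathrm{conf}(p, l) = \frac{\sum_{q \in N(p, l)} W_q}{\sum_{r \in N(p)} W_r},$$

where N(p,l) is the set of pixels in N(p) with label l and W denotes the weights, calculated as in the referenced paper:

$$W_q = \frac{\exp(-D(q, M_q))}{\sum_{r \in N(p)} \exp(-D(r, M_r))}.$$

Here M_q is the nearest neighbor of q in the feature space that has the same label as q, and D(q, M_q) is the distance between them.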

The confidence conf(p,l) is typically high in neighborhoods where all (or most) pixels are labeled l, and low on boundaries between regions or in other difficult spots. The improvement is illustrated below.
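A minimal sketch of the image-space vote follows. It assumes, as in the referenced paper, that each pixel q contributes a weight proportional to exp(-D(q, M_q)), where D is the feature-space distance between q and its best match; the common normalisation of the weights cancels in the confidence ratio, so it is omitted. The function name `image_space_voting` is hypothetical, and windows are simply clipped at the image border.

```python
import numpy as np

def image_space_voting(labels, dist, k, n_labels):
    """labels: (H, W) integer labels from feature-space classification.
    dist:   (H, W) distance of each pixel to its best match.
    Returns the relabelled image and conf(p, l) of the winning label."""
    r = k // 2
    H, W = labels.shape
    w = np.exp(-dist)  # unnormalised weights (assumed form)
    new_labels = np.empty_like(labels)
    conf = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            # k x k window around (i, j), clipped at the image border.
            rows = slice(max(i - r, 0), i + r + 1)
            cols = slice(max(j - r, 0), j + r + 1)
            # Total weight of each label inside the window.
            scores = np.bincount(labels[rows, cols].ravel(),
                                 weights=w[rows, cols].ravel(),
                                 minlength=n_labels)
            scores /= scores.sum()  # confidence of every label at p
            new_labels[i, j] = scores.argmax()
            conf[i, j] = scores.max()
    return new_labels, conf
```

An isolated misclassified pixel surrounded by a uniform region is outvoted by its neighbors, while pixels near a region boundary end up with a lower confidence.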


Ø Color Transform and Optimization

After classifying each pixel of the input image, the color of p is computed from its best match in the reference image: the nearest neighbor of p in the feature space that has the same label as p.

As a final stage, we refine the color transfer by colorizing only the pixels with a high confidence value (conf > 0.5). These pixels serve as the micro-scribbles that are fed to the optimization step.
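The thresholded transfer might look like the sketch below. It assumes that the chroma of a confident pixel is copied directly from its best match in the reference image and that the remaining pixels are left empty for the optimization step to fill in. The function name `micro_scribbles` and the array layout (two chroma channels, best matches stored as row/column indices) are hypothetical.

```python
import numpy as np

def micro_scribbles(ref_chroma, match_rc, conf, threshold=0.5):
    """ref_chroma: (Hr, Wr, 2) chromatic channels of the reference image.
    match_rc:   (H, W, 2) row/column of each input pixel's best match.
    conf:       (H, W) confidence of each input pixel's label.
    Returns (chroma, mask); chroma is valid only where mask is True."""
    mask = conf > threshold
    chroma = np.zeros(conf.shape + (2,))
    rows, cols = match_rc[..., 0], match_rc[..., 1]
    # Assumption: copy the chroma of the best match for confident pixels only.
    chroma[mask] = ref_chroma[rows[mask], cols[mask]]
    return chroma, mask
```

The pair `(chroma, mask)` is the kind of sparse color constraint that a scribble-based colorization method takes as input, together with the luminance of the grayscale image.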

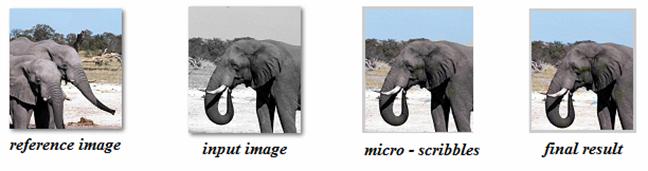

Results:

(a) Walking Cheetah

(b) Smiling Elephant

(c) Eating Zebra

(d) Satellite Map

(e) Tree Stem

This project was implemented by Mariann Normatov and Ron Yano, B.Sc. students at the Computer Science Department, University of Haifa.

This is the final project in the course Computational Photography.

The project was supervised by Prof. Hagit Hel-Or.

More information on the algorithm:

http://www.cs.tau.ac.il/~dcor/online_papers/papers/colorization05.pdf

http://webee.technion.ac.il/~lihi/Teaching/048983/Colorization.pdf

Copyright © 2014 Mariann Normatov, Ron Yano.